-

“Yes…but.” — Saving ideas in their infancy

“Yes…but.” — Saving ideas in their infancy

Photo by Mika Baumeister on Unsplash Ideas are fragile. In an idea’s infancy, it doesn’t take much to do irreparable damage to it. This is why how we approach new ideas and the language used during an idea’s infancy is so important.

One of the biggest killers of an idea is the tendency to front load the idea conversation with all the reasons an idea can’t succeed.

“It won’t work. We wouldn’t be able to know ahead of time all the inputs necessary.”

“It won’t work. Processing time would take too long.”

“It won’t work. These two systems can’t talk to each other.”

All of these are examples of idea killers. But they’re only idea killers because of the wording and the way we deliver the objection. Imagine if instead, we approached the idea from a perspective of curiosity and a commitment to the idea. Let’s add a few simple words to these idea killers and see how they seem afterwards.

“Yes, but we’ll have to keep processing time to under an hour.”

“Yes, but we’ll need to figure out how to anticipate all the inputs.”

“Yes, but we’ll need to figure out how to communicate between these two systems.”

By just changing the way we speak and challenge the idea we’ve done a couple of things.

- We’ve noted the potential problem without killing the idea.

- We’ve aligned ourselves with the idea giver as an ally. We’re now working towards a solution together.

Being able to align yourself with the solution puts you in a completely different mindset in terms of how you approach a problem. It doesn’t mean that the problems presented aren’t real and they very well might be insurmountable. But now you’ve explored the idea, supported the idea giver and most likely have come up with alternative methods that partially solve the problem.

The “Yes, but” approach is increasingly more important in remote working environments. Text based conversations operate on a different level of social interaction. Text based conversations are devoid of the non-verbal communication we often give, even subconsciously.

Even though our texts are devoid of these non-verbal cues, our minds haven’t adapted to the fact that we’re not sending them. Text based messages go out devoid of the signals that might soften your communication style. Instead the recipient is stuck, interpreting your message with energy you may or may not have intended. This is particularly true of messages around ideas.

If a person is already timid about sharing their idea, any critical comments about the idea will be interpreted in the worst light. The simple action of “Yes, but” can help to align yourself and provide support for your team member while still bringing up real world challenges.

The literal worlds “Yes but,” don’t have to be used. The key is to do the following:

- Acknowledge and validate the idea.

- Address the problem as a solvable hurdle to the idea.

- Word things so that it is clear you plan on collaborating on the solution for the idea.

Just these three steps can help save a lot of ideas and help them get out of their infancy and into full-fledged solutions to help you and your team.

-

Troubleshooting Computers Made Me a Better Patient

Troubleshooting Computers Made Me a Better Patient

Unfortunately, I’ve spent the last few days hospitalized. It doesn’t appear to be serious, the type of mundane virus or bacteria that can wreak havoc on the immunocompromised. But my stay here has given me an opportunity to watch the troubleshooting process in a different field. The art of troubleshooting isn’t an innate skill. It’s one that crafts-people develop over time. They can think about the process, they can articulate how they go about it and their process sets up an easy way to refute bad theories or continue investigating theories with a lead.

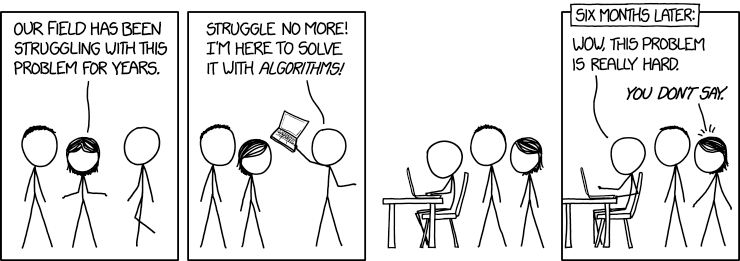

I’ve always had this issue accepting that where I work is a smaller representation of society as a whole. “Not everyone at work is a great troubleshooter, but that’s not the case in medicine!” Unfortunately, I am wrong. But if you can abstract your troubleshooting process, I assure you it can be applied to any field. Anywhere.

Systems are Complicated, Break Them Up

If you’ve ever had to troubleshoot a large complex system, I think one of the things you find out is that it’s almost impossible to do. What you don’t consider is that with enough examination, any system is likely large and complex. It’s the distance you’re viewing it from that makes its complexity visible.

When you come into a problem, the first thing you have to think about is how that problem breaks up into different spaces or components. Where are the hand-off points and at what moment can we determine where the cause lies. Sometimes you do this innately when you’re well-versed with a system, but when you get into systems you don’t know you often ditch this specific skill. For example, when you’re troubleshooting your web browsing what are the things you check for?

- Am I connected to the Internet? Meaning, can I get anywhere on the web?

This makes you divide the problem in half. If you can’t get to the Internet at all, you’re now thinking that your focus should be on things that are necessary beforeconnecting to the Internet. You might do ask questions like:

- Can I connect to other machines on my local network?

- Can I resolve DNS hostnames?

- Is my router working?

Answering these questions not only moves you steps closer to figuring out the answer, but it also helps you eliminate other theories. Your friend says “I can’t get to that website either!” That’s fine. But you can’t get to any website. So obviously you and your friend have two different issues. Once you solve your issue with Internet connectivity, you might be on the same page, but for now you’ve got your own sets of problems.

It also helps you to gauge how many problems you might be dealing with. In addition to the Internet connectivity problem, let’s say you have an issue launching Power Point. It throws a weird cryptic error.

- Is it possible that the Internet error is causing this?

- Have you seen this same error before with Internet connectivity?

This helps set the stage for future questions about symptoms that are happening at the same time but might have different sources. It might be easy to conclude “Fix the Internet and PowerPoint should start working.” But if you know that PowerPoint isn’t dependent on the Internet, then it could be a bad idea to tie these things together.

After you break them up solve the individual problems

Once you break the systems up, you might have a bunch of separate problems. If you’re well-versed in the system, you might be able to separate the problems into contributing and non-contributing issues. For example, our PowerPoint issue isn’t something we need to solve, because we know it isn’t contributing to our core problem.

You have to order the problems in order to solve them in the correct order. If you don’t have Internet connectivity at all, then it doesn’t make sense confirming that you can resolve server names. If you solve the Internet issues, what are the things you’re expecting to clear up? What does it mean if they don’t clear up? What are the next steps for that?

Before you begin solving the individual problems, have a theory on what solving that problem should do to the system. What symptoms are you expecting to clear up? What does it mean if specific symptoms don’t go away and how does that alter your troubleshooting plan?

You can use this in everything

I used technology in my examples because it’s what I know. But if you think abstractly you can use these same processes to generate questions about your car repair, your home fixes, your medical care.

“What do we think are causing these symptoms? Can we test for that? If we confirm that’s the cause how do we treat that? How soon do we expect symptoms to subside? If they don’t subside what could that mean?”

When you can stop being a passenger in these sorts of engagements, it can help you feel more confident about the outcomes, more reasoned in your decision making and more at peace with whatever money you’re shelling out.

-

Decision Rights — Communicating How Choices Get Made

Decision Rights — Communicating How Choices Get Made

Advice for leaders

Photo by Beth Macdonald on Unsplash Companies seldom have issues generating ideas and paths forward on problems. In fact, you almost get too many ideas to be able to sift through, evaluate and ultimately implement. But a bigger problem teams have isn’t generating options, but choosing which option to go forward with. (The next issue is aligning resources, but that’s a future topic) The issue of deciding on a course of action is further exacerbated when we have groups of people working on a problem with no clear idea of who the decision owner is.

At Basis Technologies we follow many of the principles and practices of the Conscious Leadership Group. The trainings we’ve had have been invaluable for me as a leader, but one of the best takeaways so far has been the concept of Decision Rights. In a nut-shell, decision rights are a clear definition of who will make a decision for any given situation. I’ve been using decision rights a lot more now that my team has grown and we’re spread out across three countries. The benefits of easy informal communication have begun to disappear as my organization grows and I needed something more formal to ensure the team is on the same page.

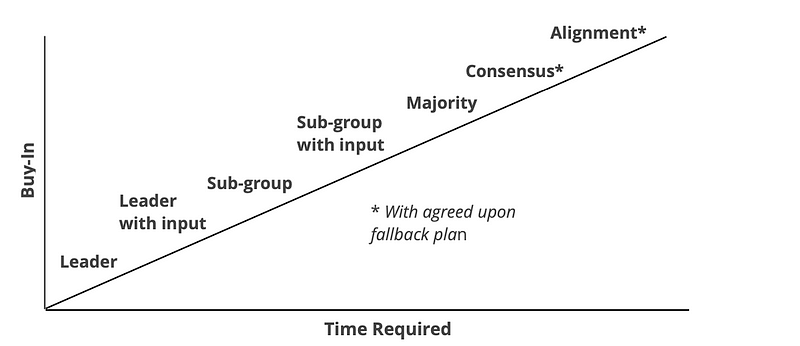

There are a total of 7 decision rights with each change in decision rights also increasing the amount of time required to reach the decision and the amount of buy-in received from the group. This will make a bit more sense as I walk through the 7 decision rights really quickly.

Decision Rights

- Leader — We are all pretty familiar with the leader decision right. It’s just like it sounds, the leader of the group (assuming there’s one clearly defined) will make the decision on his/her own.

- Leader with Input — The leader will gather input from others before making their decision. The leader usually will be responsible for soliciting the information they feel is necessary for input to their thought process.

- Subgroup — A small team of people are put together to make a decision. The team is typically made up of Subject Matter Experts (SMEs) that drive all aspects of the decision making process.

- Subgroup with input — A subgroup with input means that the subgroup still decides, but solicits information from outside the group as well, before making a decision.

- Majority Vote — A group discussion of some sort ensues, followed by a voting period. Everyone should have an opportunity to voice their thoughts and concerns prior to voting. Think of it like an election, where each candidate (or view point in our scenario) has an opportunity to discuss their feelings on the situation. After that period has finished, voting occurs and majority wins. The leader of the group or organization will decide what type of majority is needed, whether it be just a simple majority, two thirds majority etc.

- Consensus — Consensus happens when there is nobody that is opposed to the decision being made. It doesn’t mean that they can’t have doubts or reservations, but they’re not actively against it. This is your classic dinner planning scenario. You’re not against steak, even though you’d rather have pasta, but the rest of the group wants steak and you can get behind it. But just like your dinner plans, consensus decisions can be difficult to reach, so it’s wise to have a fall back decision right in the event you can’t reach consensus. Any of the previously mentioned decision rights can work, but I typically fall back to Leader with Input.

- Alignment — Alignment is when every one is in full support of the decision being made. There is no dissent. This is the hardest of all the decision rights to meet. If you can’t get alignment on dinner, getting alignment on transformational change can be very hard. Like the consensus decision right, it’s important to have a fall back decision right in case alignment cannot be reached.

With these 7 decision rights laid out, we can talk about buy-in vs time. Depending on the urgency of the decision that has to be made, some decision rights might seem more obvious than others.

Decision Rights Graph — from the Conscious Leadership Group In the above image you can see the various decision rights plotted on a graph, with the axis being buy-in and time. These are typically the two constraints that we’re working with when it comes to decision making. Buy-in can be incredibly important for large transformational change, but time may be of the essence when we’re trying to make a decision about something this quarter. There is no right or wrong answer for choosing a decision right. It all boils down to the situation at-hand and the constraints that you’re working under. If you’ve got more time, achieving more buy-in makes sense which can lead you to decision rights like Alignment or Consensus. Any decision typically benefits from a larger buy-in from the team. The problem is not every decision has the time necessary to get there, which makes some of the other decision rights attractive and appropriate for the situation at hand.

Communicating Decision Rights

I don’t use formal communication for every decision right in our team. I typically reserve it for broad impacting decisions that I know many people will have feelings about. I also don’t discuss decision rights when it’s Leader decides, because then it’s usually quite clear. It’s a terrible feeling thinking you’ve got input on a decision when you don’t. If I come to the team with a decision already made, I make that clear with the delivery of the decision. Again, this is a rare occurrence, but it does happen.

When I am communicating decision rights, it’s usually in this old, antiquated form of communication called a “Meeting Agenda”. Some of you may remember those from back in the day when it was important to know what you were going to be spending 1.5 hours of your life on. In the meeting agenda I try to lay out a few things.

- What the decision to be made is

- The timeframe allotted to make the decision (often just the length of the meeting)

- What the decision right is

- What the fallback decision right is (if applicable)

- Any tools being used to generate decision options (Brainstorming, Pros vs Cons, 6 Hats, etc)

With these points in hand, everyone clearly understands their part in the decision-making process and how decisions gets made. Even if people don’t agree with the final decision, the process to which it came into being is well-understood and transparent. The agenda doesn’t even have to be super-exhaustive. Here’s an example agenda I’ve used in the past.

Lets get together and discuss namespaces moving forward.

Problem: We need to decide if we’re going to have a single namespace per environment or if namespaces will be broken down by application. Single namespaces are advantageous in test environments, but can become unruly in production. Multiple name spaces can complicate environment management and will force specific patterns of referencing apps in pods, since you’ll have to use the FQDN. None of these are hard, but we need a specific choice.

Meeting Outcome: Decide on single name space or multiple name spaces. Decision Model: Group Consensus with a fall back of Leader Decides with input from staff, if we can’t come to a consensus.

Pros/Cons Idea Boardz

It’s just enough information to get everyone on the same page without taking tons of times crafting time blocks that probably won’t be honored anyways. (Although if you’ve got the time for a more detailed agenda it’s totally worth it. I’m just saying that there’s still value in a brief agenda if the alternative is no agenda at all)

Wrap-up

Decision rights can be a tricky thing once you’ve moved beyond the leader decides tier. Making sure people involved and impacted by the decision are aware of how decisions are made can lead to greater buy-in even after the decision has been made. Communicating these decision rights can be a huge boost to involvement and productivity. It also forces you to make rationale decisions about the two constraints of every decision, time and buy-in. The more time you have the more you can invest in getting larger buy-in. But if time is short, you may have to make due with less buy-in so that you can get the ball rolling on whatever decision you make. These pressures exist whether you acknowledge them or not so its better to go into the situation eyes-wide-open. It can even help you communicate why such a narrow buy-in was chosen. Not every decision has a time-horizon that can afford alignment or even consensus. Does this mean some decisions are sub-optimal? Of course. But a sub-optimal decision is way better than no decision at-all.

If you’d like to further dive-in or see a visual representation of Decision Rights, I’ve linked a video below and I encourage you to checkout the Conscious Leadership group.

-

Terraform, AWS, and Idempotency

Terraform, AWS, and Idempotency

A bug in Terraform? Or a misunderstanding of how a particular stanza works? Or maybe even our own automation around Terraform?

Photo by James Harrison on Unsplash Hopefully, this is only part 1 of this series as it doesn’t really have a satisfying ending so far, but still a story worth sharing. We encountered an error in Terraform that was transient but seemed to go away on its own, most likely some race condition. This post is going to walk through that failure. It’s a bit more technical than some of my other posts so those who come for the leadership thoughts might have their eyes glaze over. Your mileage may vary.

A bit about our deployment process first. We use a blue/green deployment strategy in our environment (minus the database). Automation around Terraform is responsible for bringing up the second application stack and deploying code to it. During the creation of that second stack, we received an error message we had never encountered before.

Error: error creating EC2 Launch Template: IdempotentParameterMismatch: Client token already used before.

status code: 400, request id: 4c6edce7-7497-4884-ab63-f215f9b82f6e

on ../terraform-asg/main.tf line 33, in resource "aws_launch_template" "launch_template":

33: resource "aws_launch_template" "launch_template" {There were a few things that needed to be researched here.

The IdempotentParameterMismatch Error

When an action is idempotent, it means it can be performed multiple times without changing the result beyond the initial application. I

n practice what this usually means is if I run command X, the command is aware if it had been run before in this context and may skip the usual application of the command and instead return a status or a result. A simple example is the

mkdircommand when you use the -p flag (which tellsmkdirto create the full path if it doesn’t exist).If I run that on my local workstation the following happens.

mkdir -p /tmp/test

ls -l /tmp/|grep test

drwxr-xr-x 2 jeff.smith wheel 64 Jun 9 06:31 test

mkdir -p /tmp/testThe

mkdircommand created the path/tmp/testand we can see it was created successfully. When I run the command again, it completes successfully with no error message.That makes this command idempotent. No matter how many times I run this command, I know in the end I’ll get a successful result and that the path

/tmp/testwill exist. Now contrast that with justmkdir.mkdir /tmp/test2

ls -l /tmp/|grep test2

drwxr-xr-x 2 jeff.smith wheel 64 Jun 9 06:35 test2

mkdir /tmp/test2

mkdir: /tmp/test2: File existsNow with mkdir, on the second execution I get an error message, which is a different end result than my first execution. The directory exists, but the error code I get back is different. That makes this a non-idempotent operation. But why do we care? Well in the second example I need to do a lot more error handling for starters. And this is a basic example.

When doing something like creating infrastructure, it could result in launching more instances than you intended, which is what this

IdempotentParameterMismatcherror is designed to prevent.When you make an AWS API call to create infrastructure, just about every endpoint (to my knowledge) does this is in an asynchronous fashion. What this means is your API call returns immediately but the work of actually creating the infrastructure you requested is still in progress. Because of this you typically need some sort of polling mechanism to determine when the operation has been completed.

Several AWS API endpoints support idempotency, which allows you to specify a client token to uniquely identify this request. If you make the same API infrastructure creation call and use the same client token, instead of creating a new instance, it will return the status of the previously requested instance. When creating infrastructure programmatically this can be a big safety net to avoid creating many copies of the same infrastructure. And that’s where our error comes in.

The error is stating that we have already used the client token. Digging into the documentation a bit, a more specific meaning is that we changed parameters in the request but reused the client token, meaning the API doesn’t know what our intent actually is. Do we want new infrastructure with these parameters? Or are we expecting already existing infrastructure that matches those parameters? To be safe, it throws this error.

The user is always responsible for generating the client token. But in this case, the user is actually Terraform. That created some surprise and relief on our part since it meant it most likely wasn’t any of our wrapper automation. But we still needed to be sure. The first thing we did was an attempt to find what client token was used and was it actually used twice.

Luckily the error gave us a

RequestIdwhich we used CloudTrail to look up. In therequestParametersfield of that request we were able to find the ClientToken used."requestParameters": {

"CreateLaunchTemplateRequest": {

"LaunchTemplateName": "sidekiq-worker-green_staging02-launch_template20220510183630312700000005",

"LaunchTemplateData": {

"UserData": "",

"SecurityGroupId": [

{

"tag": 1,

"content": "sg-0743bcbd08cadba1d"

},

{

"tag": 2,

"content": "sg-6daf421e"

},

{

"tag": 3,

"content": "sg-0818108e24803a418"

}

],

"ImageId": "",

"BlockDeviceMapping": {

"Ebs": {

"VolumeSize": 100

},

"tag": 1,

"DeviceName": "/dev/sda1"

},

"IamInstanceProfile": {

"Name": "asg-staging02-20220510183628901400000003"

},

"InstanceType": "m4.2xlarge"

},

"ClientToken": "terraform-20220510183630312700000006"

}It looks sufficiently random in the same format that Terraform often uses to generate random values.

It definitely didn’t appear to be something we generated. We then decided to search all requests in that time frame to see if any of them had the same client token.

Sure enough, there was a second request made that reused the same Terraform module (which we wrote) to generate a second ASG and launch template.

"requestParameters": {

"CreateLaunchTemplateRequest": {

"LaunchTemplateName": "biexport-worker-green_staging02-launch_template20220510183630312700000005",

"LaunchTemplateData": {

"UserData": "",

"SecurityGroupId": [

{

"tag": 1,

"content": "sg-0743bcbd08cadba1d"

},

{

"tag": 2,

"content": "sg-6daf421e"

},

{

"tag": 3,

"content": "sg-0818108e24803a418"

}

],

"ImageId": "",

"BlockDeviceMapping": {

"Ebs": {

"VolumeSize": 200

},

"tag": 1,

"DeviceName": "/dev/sda1"

},

"IamInstanceProfile": {

"Name": "asg-staging02-20220510183629183700000003"

},

"InstanceType": "m5.xlarge"

},

"ClientToken": "terraform-20220510183630312700000006"

}As you can see, there are differences in the request, but the client token remains the same. Now we’re starting to freak out and think maybe it is our code, but we still couldn’t see how.

Generating the ClientToken

As I mentioned previously, generating the client token is the job of the user from AWS’ perspective. From our perspective, that user is Terraform. We’re not GO experts by any stretch of the imagination on our team (although we’re looking for a few good projects to take it for a spin. We have a lot of interest).

But in order to understand how the client token gets generated, we were going to have to look at the Terraform source code. After a little digging, we came across the code living in the terraform-plugin-sdkas a helper function.

func PrefixedUniqueId(prefix string) string {

// Be precise to 4 digits of fractional seconds, but remove the dot before the

// fractional seconds.

timestamp := strings.Replace(

time.Now().UTC().Format("20060102150405.0000"), ".", "", 1)idMutex.Lock()

defer idMutex.Unlock()

idCounter++

return fmt.Sprintf("%s%s%08x", prefix, timestamp, idCounter)

}In this function, the author is generating a timestamp accurate to the second. It’s possible that multiple executions could hit in the same second-time span, but the value also gets a counter appended to it.

The counter is in a mutex so the value of

idCounteris shared across executions and the mutex prevents concurrent execution. There should be no way that this function generates the same client token twice. But that doesn’t mean that the function calling for the client token isn’t storing it and possibly reusing it.Wrap Up

This is where our story ends for the moment. We started to look into how and where the client token was getting used, but since we felt strongly that this was going to be related to a Terraform issue of some sort, we had to shift gears for a solution. We weren’t going to run a custom patched version of Terraform. We weren’t going to upgrade on the spot. And we weren’t going to wait until a PR got approved, merged, and released, so that put us on a different remediation path.

Our current fix was to specify a

depends_onargument for the two resources in conflict. Other times when the error happened, we noticed it was always these two resources in conflict, so the hope was that thedepends_onflag would prevent these from being created in parallel.So far that hope has paid off and we haven’t seen the error in any environment again. But we plan to continue to research the issue out of nothing more than morbid curiosity. It might lead us to a bug in Terraform, a misunderstanding of how a particular stanza works, or maybe even our own automation around Terraform.

Who knows? If we find it, you’ll be sure to find a Part 2 to this article!

-

I think the meeting and the medium for the meeting can be separated.

I think the meeting and the medium for the meeting can be separated. Yes, meetings are generally unproductive because people don’t respect the true cost of a meeting. If the hourly rate of a meeting was deducted from the organizer’s pay, they would probably treat it with more respect. But I don’t think meetings are inherently bad. What would be the tool for gathering input, feedback and decision making look like? Back and forth on a confluence document? After 4 exchanges it usually ends up in a meeting. Which is not uncommon for me to use as a benchmark. Try to solve things via email or some other medium, but after X many exchanges, it moves to a meeting with a clear agenda, decision to be made, decision rights (who makes the decision?) and method of getting to that decision. (Brainstorm, Pros/Cons exercise, 6 hats etc)

-

Inbox Zero

Inbox Zero

Photo by Krsto Jevtic on Unsplash Inbox Zero is another one of those productivity hacks that you hear a lot about in tech circles. For those of us with an unread message count in the thousands, it sounds like a far-off intangible dream like faster than light travel or sensible gun laws.

But after doing inbox zero for a few years, I’m here to tell you that the dream can be had! Inbox Zero is achievable if you remain focused and disciplined.

What is Inbox Zero?

Inbox Zero is an email management strategy dedicated to keeping your mailbox from reaching the levels of insanity where you simply give up on any hope of actually managing it. In your despair, the unread message badge sits on your mailbox as a scarlet letter, informing those around you that you’re as disorganized as you feel. The goal of inbox zero is to get your mailbox to empty at the end of every day.

That sounds like a heavy lift, but the secret to inbox zero is that you don’t actually have to respond to every email in the same day. It’s about processing your mail down to zero every day.

The thing about an overflowing inbox is that you never know what might be lurking inside those 1352 messages that you have as unread. It might be an important ask from a senior leader. It might be a change to your kid’s violin schedule. It could be a reminder that your car registration is set to expire. The uncertainty of what’s buried in those messages causes many of us a lot of subconscious stress. A feeling of being out of control begins to invade our psyche and we can never fully relax.

The goal of inbox zero is to reduce that stress not by responding to all of your email at once but by getting an understanding of what’s in your mailbox so that you can make a conscious decision about what to do with it.

The nature of email

When you think about emails that you receive, they really boil down into one of four categories.

- Something you need to do

- Something you need to know

- Something you need to have

- Garbage

The “something you need to do” category is probably the one we’re all the most familiar with. Knowledge workers get many of their tasks via email, whether it be an assignment from your direct manager or just the need to respond to the email because a question has been raised that you have the expertise to handle.

Something you need to know are those informational emails that sometimes turn into things you have to do. It might be a heads up that a particular meeting is occurring, a policy is changing, your kid has a change in soccer practice, etc. You don’t always have to do something in response to this knowledge sharing but there’s often a time constraint to it, which makes processing it in a timely fashion pretty important.

Something you need to have is really a riff on something you need to know. It’s the transmission of some data you need to have access to in the future. Think of spreadsheets, concert tickets, receipts etc. It’s important that you consider the likelihood of needing to recall this data later. The emailed receipt from your coffee visit probably doesn’t have much value, but the receipt from a shipping order could be useful up until you receive the item. It’s important to be critical about how you evaluate these types of emails or else everything can fall into the category of “something you need to have” and you become the digital equivalent of a hoarder.

The last category, garbage is pretty self-explanatory. Junk mail, chain letters from Aunt Isabelle, the 500 donation requests from your local political party etc. If it doesn’t fall into one of the categories I listed earlier, then chances are it’s junk.

Processing Email

As I mentioned before the trick to inbox zero is processing all of your email. By processing your mail and getting an understanding of what’s in your inbox, you can achieve some level of peace, because at the very least you know there’s not a time bomb waiting for you deep in your unread count.

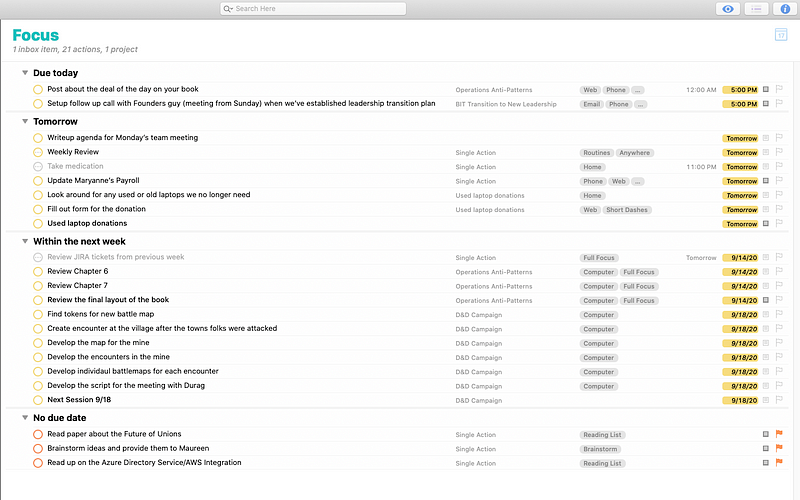

When you process your email, identify what type of email it is. If it’s something you need to do, ask yourself if you can accomplish the ask in a short amount of time. My personal limit is 5 minutes or less. Many people use 2 minutes or less as their limit. Whatever limit works for you, set it and take care of all messages that meet that criteria, whether it be performing a task or just responding to the email. If you can’t finish the task within your time limit, move the email to a “To-do” system or folder. I personally love the Getting Things Done methodology and have been using it myself for over a decade now. But no matter what your system is, the key is to move it out of your “Inbox” and somewhere dedicated to work that needs to be done. It might just be a separate folder in your mailbox or a more sophisticated solution like OmniFocus or Todoist. The key is to make sure it’s out of your inbox!

For things that are “something you need to know” or “something you need to have”, the same rule applies. Get it out of your inbox into something that’s more specifically for those types of things. I personally use DevonThink as a document storage manager. Anything I need to keep or store I put in DevonThink with a set of tags that’ll help me to retrieve it later. But you don’t need anything as robust as DevonThink. You can come up with a standard folder system in your mailbox or on your computer’s filesystem. If you intend to store files or mail on your filesystem, I recommend using a cloud storage option like Dropbox or iCloud Drive to make sure that you have access to your files on all of your devices. (If you’re interested in how I file documents, drop me a note and I’ll write a blog post on it) But again, the theme is to get it out of your inbox! Just the act of handling the message will give you the context necessary to decide if you need to deal with it immediately or not. You might process a note and realize that “I need to deal with this right now and then I can just delete the mail.” Or you might end up converting that “need to know” into “something to do” and transferring it to your to-do list. But if it sits in your inbox, flagged as unread, it will gnaw at your psyche and slowly drive you insane.

When it comes to garbage mail, I say that you need to be as ruthless as possible. Flag messages as junk so your mail client can learn what’s valuable and what’s not. Unsubscribe from newsletters that you don’t read with a passion. Opt-out of marketing emails that you inevitably get subscribed to when you make a purchase. Lastly, create email rules for those particularly stubborn mail senders that will route those mails directly to the trash bin when all else fails. You’d be amazed how much noise you can cut out when you’re diligent about keeping junk mail from hitting your inbox. You’ll never get all of it, but even a 30% reduction will have a noticeable impact.

When to process email

Another trap that many of us fall into is keeping our email client open all day. Don’t do it! I try to limit email processing to 3 times per day. In the morning when I start the day, in the afternoon after lunch and one final time for the end of the day. All other times, I try to keep my mail client closed. There’s one caveat to this approach though. If you use Outlook as your mail client, you might run into an issue where your calendar application and your mail application is one and the same. Closing out your email could also mean locking yourself out of calendar reminders, which is a deal breaker. It’s for this reason that I personally migrated to using the standard Apple Mail app and Busy Cal for email and calendar management. By having two separate clients, it’s easy for me to divorce these two tasks. If you’re stuck in Outlook, you might want to consider changing your fetch frequency to something longer. I avoided this approach, so your mileage may vary.

Keeping your mailbox open is a distraction as the notification bell continuously pulls your focus away from what you’re doing and sucks you into the drama of the mailbox.

Wrap-up

Inbox Zero may sound like a fantasy but I assure you it’s possible. When you decide to give inbox zero a shot, I recommend that you plan to spend an entire evening focused on processing your inbox. (Depending on how many emails you have to go through of course) Getting out of mailbox debt will be more time consuming than you might imagine, but it’s energy and effort well spent. If you have an overwhelming amount of email and you can’t fathom processing it all, there’s always the option of email bankruptcy where you concede to your mailbox, declare to folks that you won’t be responding to anything sent prior to this moment and you do a massive delete on all the mail in your mailbox. It’s a gutsy move but sometimes it’s necessary. But whether you get there through processing your inbox or declaring email bankruptcy, achieving inbox zero will give you the joy necessary to keep up with it.

Good luck!

-

The Office is Dead….Long Live the Office

The Office is Dead….Long Live the Office

Photo by Nastuh Abootalebi on Unsplash NOTE: The views expressed here are my personal views and don’t reflect the views or position of my employer.

The office is dead. It’s not dying or on life support, it’s just dead. The pandemic put it on life support and employers finished the job when they opened the doors to fully remote hiring. When teams became spread across the country, the purpose of the office died and it accelerated a shift that was bound to happen anyways. Remote work became the primary means of working and collaborating.

The value of the office

The real value behind the office was conformity, collaboration and indoctrination. Conformity had a range of ways in which it was exercised. Everyone had the same mouse, the same keyboard, the same monitors and this allowed companies to streamline the support process. In many offices, dress codes enforced a standard that would breed a set of attitudes. The more formal the dress code, the more professional the setting. Unless of course you were in Hollywood, Wall Street, Sales etc. But that was the theory anyway. How people worked, when they worked were all managed under the watchful eye of leadership, where too many coffee breaks were easily noticed. The loss of that conformity has sent many managers into a bit of a panic.

Collaboration was a huge hit in the office. No one will ever convince me that a video conference meeting is as effective as in-person meetings. When your best friend goes through a terrible breakup, you’d never say “Grab a beer and meet me on Zoom and lets talk about it.” No, you go to the bar and you have face to face communications about it. And if you can’t go to the bar, you have a Zoom call and complain about how much you wish you could be in-person doing the same thing you’re doing on a “just as effective” medium. If it’s not as effective for drowning your sorrows, it’s definitely not as effective for collaborating on complex topics, thoughts and ideas. Being able to share the same pen, on the same whiteboard without losing 3 seconds of audio every time you talk at the same time is priceless and something that I don’t think remote work will ever replace. That’s not to say that remote work is incapable of producing good collaboration, because it most certainly can. It just takes more dedication, planning and participation by all attendees.

Indoctrination is another hard one. Replicating a culture remotely is a challenge that I think many companies are struggling with. It’s hard enough to build a culture in person. We lose the rituals of culture building as they get lost in translation to video conferencing. The activities that resonated in-person don’t resonate through the lens and we’re still trying to figure out how to replace them. I think we’ve all learned that a lift-and-shift of cultural activities doesn’t work. Sharing a team lunch on camera isn’t the same and quite hoenstly turns into a disgusting affair really quickly.

With these three things listed, there’s one thing that’s a bit of a glaring omission and that’s work. The office has never been a place where “work” is super efficient. You’re constantly bombarded with distractions, drive-by visits, unexpected delays in your commute just to name a few. For many people, the office was the only option for work, so its effectiveness was never deeply considered. Prior to the pandemic, most people hadn’t transformed a space in their home to be effective work from home (WFH) employees. Those employees that were already fully remote learned this secret a long time ago and have been reaping the productivity gains ever since. But people new to the WFH game had to figure it out on their own. Many of us still don’t have a great work from home setup due to space constraints. Those people are probably longing for the reopening of the office, but they’ll find that what they come back to is just a shell of its former self.

The flood gates of remote hiring

Companies have been desperate to hire the last year or two. Many clever companies decided to open the flood gates and start hiring remotely. Even yours truly was a staunch supporter of the in-office lifestyle. I preferred people in the office, collaborating and working together, drinking the indoctrination kool-aid. But I also sensed the winds changing. Now 4 out of my last 5 hires have all been remote. My team is almost 50% remote now and honestly, one of my employees has such a long commute he might as well be considered remote.

The point being, I will never return to a world where everyone is in the office. It’s just not possible. Even if we forced the local people back into the office and allowed the remote people to stay remote, it creates an even worse scenario. Your team becomes bifurcated as they self-organize into remote and in-person silos. Yes, we should be doing everything in slack and yes, we should make sure every meeting has a Teams invite. But if you can have a meeting with three people in the office and one remote person, human nature dictates the path of least resistance and that four person meeting gets cut down to a three person meeting real fast. Guess who gets dropped.

Same with the remote people. You’re trying to collaborate with people remotely and even though you have a great microphone, solid lighting and an HD camera, you’re working with a bunch of people trying to figure out the antiquated video conference software in the room. The microphone sucks so you can’t hear Joey, who always sounds like he’s talking at a funeral. Mary gets up and starts doodling on the whiteboard, forgetting that you can’t see the whiteboard. Now we spend 10 minutes adjusting the camera to point at the white board, only for it to pan back to the first person who starts talking. Yeah, you’re familiar with this nightmare. There’s no need to rush back to it.

If everyone is remote, everyone is on the same playing field. And while it may not be as effective as everyone being in the office, it’s way more effective than having some people in the office and some people remote. If you’ve started down the path of remote hiring, the current incarnation of your office is dead.

The Future of the Office

The office was never about work. But now we can’t even afford to pretend that it’s about work. Most of us have created a work from home setup that rivals anything the office would provide. It’s tailored to our needs and our tastes. When we go to the office now, it’s a step down in every category. Even my wife has a 34" widescreen monitor now. People are spending major dollars on their chairs, their keyboards, their mouse because now it’s an investment. The occasional Friday at home didn’t warrant the investment we’re willing to make now that it’s 5 days a week. And that means companies are either going to have to adjust their budgets and their equipment flexiblity or they’ll need to find another way to entice people back to the office. It’s hard to compete with great ergonomics, beautiful displays and a 2 minute commute.

The office still rules in the areas of indoctrination and collaboration. Collaboration has to be intentional though. For starters, every meeting space has to treat remote workers as first class citizens. That means solid audio and easy to use camera setups that are well maintained. The tech in these rooms has to be designed with these hybrid meetings in mind. I don’t think it will ever be as good as being in-person or everyone being remote, so these items are tablestakes just to get people considering a trip downtown.

Where the office will truly shine though is in collaboraton between people who normally don’t collaborate. Building relationships and strengthening netowrks is the place where the office still has clear dominance. For example, I went into the office a month or so ago for a meeting run by our facilities team. I don’t work with the facilities team often and certainly not since going full remote 2 years ago. But it occurred to me that I talked with Jan (our facilities manager) every day prior to the pandemic. Why? Because we would always cross paths in the kitchen and strike up a conversation. The kitchen was a sort of central access room and because of the respective locations of our desks, we would constantly find ourselves running into each other in the morning and afternoon. A happy little accident.

The office was great for building these sorts of connections. But now we’ll want to be more deliberate. How do we construct the office space to generate these common access patterns. Do we need to rethink the idea of these silo’d teams, isolated to one specific spot in the office? How do we design the layouts to encourage and entice foot traffic from many different groups, creating the conditions for the happy little accidents Jan and I shared for over 4 years? The office needs to look very different than how we left it, with these thoughts front and center.

The need for networking becomes apparent when you have more than 4 people from the same department in the office at the same time. The desire for human contact is palpatable. When I go to the office, I spend more time talking and catching up than I do working. Sure that will die down with the frequency of office visits increasing, but the long tail on that is probably bigger than you think.

Many companies aren’t considering a five day a week return to the office schedule. Some are doing the three days in the office, two days remote or a version of that. But many companies don’t dictate who works on what days, so you might not get the colalboration (and therefore the productivity) that you were expecting because people aren’t in the office on the same days. That also means you’ve got a much larger number of personnel combinations, meaning you might not see the same sets of people all the time.

Networking and specific collaboration events is where the office will prove its value. But with a shift away from “where work happens” the office design and layout also needs to change. New tools need to be brought to the office to streamline the in-person/remote collaboration efforts. If you’ve still got people pointing their laptop web cams at the whiteboard for the remote folks, you won’t succeed in this new era of office work. We’ll need to rethink how people are grouped together for work as well. How do we entice the chance meetings that were fueled by common spaces such as the lunch and coffee areas? Does grouping people by departments still make sense if the office isn’t about general work getting done?

These are all questions that we’ll need to ask ourselves as we figure out where the office fits into corporate life. I believe the office does still have a future but only if we rethink its purpose and the organization’s commitment to that purpose. You’re not going to lure people back to the office with snacks and unlimited soda. The office will have to offer something that can’t be found at home. People and their need for interaction both personal and professional will be the cornerstone of the weekly office visits.

-

Treating OPS Teams Like Product Teams

Platform as a Product

Photo by Arno Senoner on Unsplash Operations is one of those areas that many people in the company struggle to fully understand. The depth and breadth of responsibility varies per organization, with production support being the only thread you find consistently in companies. (And even that is changing with you build it, you run it becoming popular)

But infrastructure operations is too important of a component to be relegated to the annals of cost-center accounting. Smart organizations understand this and invest heavily in Operations teams. My job as a leader is not only to evangelize what it is we do, but to tell a story that’s relatable to stakeholders so that they understand how our role impacts their day-to-day lives. Most people don’t spend a lot of time thinking about disaster recovery, high availability or even ongoing maintenance of the things we build. Everything that is built and operating has some sort of maintenance cost associated with it. For many, it’s easy to think once a product is launched, it just exists on its own with no real need future management. But software is never finished, just abandoned.

With this lack of clarity on the value my team brings, I’ve been working through different ways to more effectively evangelize what it is we do. This led me to the idea of managing the Operations team like a product team, using similar techniques, roles and producing similar artifacts as part of how we manage what we do.

Over the next few months I’ll be working to make this shift within my team and chronicling some of the experiences we have, the challenges and the thoughts around this transformation. I’m still working on a way to tie these things together into some sort of easily searchable series, but know that this won’t be the end of the conversation. I’ll have some sort of tag to use across the entire series.

What’s the Product?

The first thing I had to ask myself when I cooked up this idea is, Exactly what is the product that I’m “selling”? That question was actually easier to answer than I thought. At Basis we’ve been pretty adamant about building as much of a self-service environment as possible for engineers. Unfortunately the self-service approach never took on a polished, holistic view the way a product would. We would solve problems using a familiar set of patterns, but we’d never actually think about them from the perspective of a product. What you end up with is a bunch of utilities that look kind-of the same but not enough for you to make strong assumptions about their behavior, the way you might with say Linux command line utilities.

With Linux command line tools, whether you realize it or not, you make a bunch of assumptions about how the command functions. Even if you’ve never used the command before in your life, you know that it probably takes a bunch of flags to modify the behavior of the script. The flags are most likely in the format of “- or –”. You know that the output of the command is most likely going to be text. You know that you can pipe the output of that command to another command that you might be more familiar with, like grep. Leveraging these behaviors almost becomes second nature because you can count on them. But that didn’t just happen naturally. It took a deliberate set of rules, guidelines expectations etc. This is what’s missing from my team’s current approach to self-service.

So back to the original question of “What’s the product?” I’ve been working on a definition that helps to frame all of the subsequent questions that follow, like product strategy, vision etc.

What is the product?

A suite of tools and services designed to support in the creation, delivery and operation of application code through all phases of the software development lifecycle.

It’s a bit of a mouthful at the moment and I’m still tooling around with it a bit but I think it’s important to conceptualize what the product is that Operations is selling. The best analogy I’ve been able to come up with is the world of manufacturing.

As an inventor or product creator, you might design your product in a lab, under ideal conditions. But you have no idea how to mass produce it. You have no idea how to source the materials effectively for it. You have no idea the nuanced problems that your design might create when you’re attempting to create 200,000 of whatever you created.

If you’re a solo creator, you’d probably start talking to a manufacturer so that you can leverage their expertise as well as their production facilities to help turn your dream into a reality. If you work for a large enough company, you might have your own internal manufacturing team that specializes in various types of product creation. This is the analogy for operations. We take application code that the developers have created, then using our infrastructure and processes, get it to a state that’s production ready.

I’m sure given a little scrutiny this analogy will show some holes, but I think it does a good job of at least getting people in the mindset for viewing infrastructure and the supporting services as a product. The final product of a manufacturing line is a blend of design and production, similar to the way the quality of the application is a blend of design and production.

How does this change the way we look at ProdOps?

I can imagine many people are reading this and thinking “What’s the big deal, so it’s a product. How does that change anything?” Depending on your team, it might not change anything. But for many groups, once you start looking at operations as a product team, it really starts to change your perspective on the management of your infrastructure. But most importantly, if we get our minds right, thinking about Operations as a product opens us to a world of best practices, workflow management techniques, reports and communication patterns just to name a few. A perfect example is the idea of user personas.

In the operations world, we have a vague idea of who are “customers” are internally. Not only that but we have a very specific idea of what a developer should know and care about. Our expectations manifest themselves on how we interact with developers. Our forms, our workflows our RTFM approach, all are based on our elevated expectations of developers. But if we approach this from a product centric viewpoint, we’re forced into a customer centric viewpoint as well. Nobody would tell their customers “you should just be more sophisticated” or “you should just know that”. It wouldn’t be great for sales. This is one of the reasons why product teams develop user personas as a way to represent their target customer. They might even create multiple user personas to represent the breadth of their potential customer base, as well as how those personas might use the same tool differently than other customers. User Personas are in no way revolutionary, but thinking in terms of a product makes their adoption in an operations setting a much more natural transition.

Wrap up

As I mentioned previously, this is really a big experiment on my part. At the time of this writing, I’m very early in the process. But I hope to use this blog to share parts of the journey with you. Hopefully you’ll be able to learn from some of my missteps.

In the next part of this series, I’ll be writing about the establishment of the product vision, product strategy and product principles and how they play their parts in building the roadmap for the Operations infrastructure.

-

Organizing Tickets for OPS Teams Part 2

Organizing Tickets for OPS Teams Part 2

Photo by Alvaro Reyes on Unsplash In my previous article I laid out some of the ground work for how I setup my team’s workflow management. In this article I’ll go a little deeper, specifically around ticket types and my labelling process in order to get more data from our ticket work so that I can effectively manage the team.

Ticket Types

As previously mentioned, my team uses JIRA for ticket management. Any ticket system worth a damn will have some concept of ticket types so the lessons presented should still be applicable. I’ll be writing directly about my JIRA experience, so your mileage may vary.

The first thing when considering what ticket types to create is how I want to report on this data in the future. If I don’t care about the difference between a Defect and a User Story, there may not be much value in separating the two ticket types. With reporting in mind, I go about laying out the different ticket types I want as my first layer of reporting.

- ProdOps Tasks — This ticket type is designed for end users (developers, QA staff, etc) who need support from my team for something that is need in “quick” fashion. Quick might be minutes, it might be days but the important thing is that it can’t wait for the normal iteration planning process of my team to happen. This is interrupt driven work. As a result, the workflow for ProdOps tasks has these tickets skip over the backlog and land directly into the Input Queue.

- Stories — These are larger requests that are going to take time, planning and effort. They might come from customers (again, developers, QA staff, product owners etc) but they’re often generated from within our team. Stories are always capable of being scheduled and therefore go directly to the Backlog upon creation.

- Defects — When a piece of infrastructure or automation that my team supports isn’t working as intended but is not blocking a user’s ability to do their job, we mark this as a defect. An example might be that our automation does an unnecessary restart of the Sidekiq Service, which results in a longer environment creation process. It is a pain for sure, but the user will live. It’s still something we should address, hence the defect ticket. Defects go directly to the backlog.

- Incidents — When a problem is occurring, there’s no workaround and there’s a direct impact to a group of people’s ability to work, that’s considered an incident. An incident exists regardless of the environment it happens in. (No matter the environment, it’s always production for somebody) Incidents skip the backlog and go straight to the input queue. Incidents are often generated automatically via PagerDuty since all of our alerting happens through the Datadog/PagerDuty integration.

- Outage — When we have large system wide outages we create an outage ticket to track the specifics of the larger impact. Because incidents are generated by alerting, when there’s an outage we will often have multiple incident tickets that are all related to the same problem. The outage ticket allows us to relate all of those tickets to a master ticket, as well as use the outage ticket to track the specific timings and events of the larger incident. Outage tickets are generated manually at the declaration of an outage.

- Epics — I use epics to tie multiple stories into larger efforts. I also use epics as a way to communicate what the team is working on in a higher level fashion to my management. My boss doesn’t care that we’re working on moving away from the deprecated “run” module in Salt Stack. (That’s too low level) Leadership wants larger chunks of work to understand what’s happening on the team. Having an epic with a business level objective at its definition is much easier for leaders to follow and understand.

Each of these ticket types were created with two primary things in mind. * How do I want to report on tickets? * How do I want these tickets to behave as it relates to the backlog and input queue?

How do I want to report on tickets?

I create the ticket types based on how I want to report. ProdOps Task tickets were created to get an understanding of not only the demands that other teams are placing on my team but the urgency of those demands. This might be something material like “Need help with a new Jenkins Pipeline” to something routine like “New hire needs access to Kubernetes.” Having these types of requests separated into their own ticket type allows me to very easily create reports around them. (Even with JIRA’s horrible reporting abilities)

Stories and defects when compared to incidents and prodops tasks allow me to get a sense for how much planned work the team is doing versus work that bullies its way into the queue and demands our immediate attention.

Something to consider about ticket reporting. Reporting can be an inexact science. Much of it is subjective when you start looking at the details of a ticket. The thing to keep in mind with this sort of reporting is that we’re looking at the data for themes not for precision. Do I care that I had 3 tickets get categorized incorrectly as defects? Not when 60% of my tickets are defects. The 60% number (if true) helps to draw my focus. When it comes to reporting, look for a signal, but then validate that signal. Don’t just assume the data is accurate and start making changes. It’s just too difficult to keep the data completely accurate, so you should always look at your ticketing reports through that lens.

How do I want these tickets to behave as it relates to the backlog and input queue?

Tickets that are too urgent to go through the planning and prioritization process need to be made available to the team for work immediately. By creating those as separate ticket types, it’s easy for me to create a different workflow that allows these tickets to jump straight into the Input queue. I can also add functionality to flag these items or take other actions to raise their visibility to the team. But the ticket type drives my ability to handle them differently.

Different ticket types for end users to leverage also makes it much easier for them to interact with us as a team. Almost exclusively we tell our users to create their tickets as ProdOps Tasks. The majority of the time, they’re items that need to be addressed sooner rather than later. In the cases where their tickets actually can be scheduled we just convert the ticket to the appropriate ticket type (based on our reporting needs) and we move it to the backlog for the next planning meeting. This removes the anxiety of choosing the wrong ticket type from the user. Create it as a ProdOps Task and we’ll do the rest.

Ticket types can go a long way in helping you to create meaningful reports on the activity of your teams. It also gives you a way to slice your workload to see how different areas are impacted. The average time to close a ticket might be 14 days but then you find out that if you separate that by ticket type, the incident tickets are the outlier for resolution time. Maybe your team isn’t consistent about closing those particular ticket types for some reason. Or perhaps the automation that you use to resolve the tickets through monitoring isn’t setting the “Resolution” field on the ticket appropriately.

Sometimes though you want a level of reporting that goes beyond what ticket types allow for. This is where I use labels.

Using Labels for Reporting

Labels are pieces of metadata that you can add to tickets to give them a bit more description. The beautiful thing about labels (and metadata generally) is that they’re so flexible. The horrible thing about labels is that they’re so flexible.

The reporting on labels in JIRA isn’t the greatest, but the pain of pulling this data into a separate tool and figuring out the JIRA data model is much higher than just dealing with the reporting shortcomings, so here we are. When it comes to labels, relying on team members to always label tickets has varying levels of success. Some team members will be extremely diligent about it while others will be more lax. It’s good to have a process where you can validate that labels have been applied to tickets appropriately.

The issue I find with labels is that it can be difficult to know whether the label is just missing on a ticket or if that ticket doesn’t meet the criteria for the label. In order to combat this, I’ve designed my label strategy so that I understand what my label is trying to communicate and I ensure that the positive label (i.e., this ticket matches that criteria) has an opposite label, denoting that it doesn’t meat that criteria. For example, a label that I want all my tickets to have is whether the ticket was a PLANNED ticket, meaning the team decided when it would be done versus an UNPLANNED ticket, which had its schedule forced on us for one reason or another. Instead of just having a “PLANNED” label for those tickets, we also use an “UNPLANNED” label for the others. This way I can always know if a ticket was processed or not (for this criteria at least) because it should have one of these two labels.

Processing Tickets for Labelling

For the labels that I absolutely want to ensure every ticket has, I create filters to identify tickets that do not have those labels. For example, my planned/unplanned filter looks like this:

project = "Prod Ops Support" AND created >= startOfYear() AND (NOT labels in (UNPLANNED, PLANNED, TEST-TICKET) OR labels is EMPTY)This will give me a list of tickets that haven’t been labeled yet. Using the Bulk Change tool, I can quickly scan through the tickets and “check” the items that I considered UNPLANNED. With the Bulk Change Tool I can then select each ticket that I want to add the label to.

JIRA Bulk Edit Tool After going through the Bulk Edit wizard and adding the label the query should now return fewer results, since we’ve updated all of the UNPLANNED tickets. Now we can select all of the remaining tickets and add the PLANNED label to them. Repeat the same process with the Bulk Change tool and you’re good to go.

NOTE: Make sure you disable notifications for your bulk edit change. Or lots of people will be frustrated with you

I repeat this process for all label sets that I want to add. Each label set has a query similar to the one I used for PLANNED/UNPLANNED tickets which allows me to quickly identify tickets that need to be processed.

Another label pair I add is TOIL/VALUEADD. This identifies which tickets are work that we shouldn’t be doing as a team and need to automate or transition to another group. An example of TOIL work would be user creation.

All of this might sound like a lot of work, but I assure you I spend no more than 15 minutes per week doing this type of labeling work. I do it on Monday mornings every week in order to keep the volume relatively low. And again, the aim for me isn’t 100% accuracy, but to get the broad strokes so that I can see the signal start to bubble up.

Wrap Up

Now that I’ve explained my ticket types as well as my labeling process we can discuss the different types of dashboards that can be built in a future blog post.

-

Change is Scary, Even When It’s Fun

Change is Scary, Even When It’s Fun

Second-order thinking can help us evaluate the consequences of our consequences

Woman walking in front of a sign that reads Let’s change One thing I’ve learned since having children is just how early in life many of the faults in humanity show up. Children are reflections of ourselves but in the purest form. When children reveal behaviors like greed, biases and violence, it makes you start to view these behaviors as a natural part of human nature that can only be controlled through societal norms.

I say these things to prepare you for the fact that you are not immune to these behaviors. None of us are immune to biases and it’s easy to accept the reality our biases create. Biases can also hide our true motivation for taking (or not taking) a course of action.

My children are at the age where we can play video games together, which is way better than playing with Paw Patrol action figures. The artificial world of video games is starting to reveal the dark underbelly of human behavior. When I see this darkness manifest in my children, it makes me look at these behaviors critically. In this post I’ll talk about an experience I shared with my daughter Ella, who is 10 years old, and the parallels I see in the workplace.

The Video Game

Satisfactory is a video game where players work together to extract resources from an alien planet and build various components out of those resources for their employer FICSIT. To do this the players move through a series of improving capabilities and skills that allow them to build factories to automate a lot of this work. Factories are a collection of machines that automate tasks in a pipeline like fashion to start with one type of input (e.g. iron ore) and at the end of the pipeline have a type of output, like iron rods.

One of the key tasks early in the game is making fuel for your generators. Generators are used to power the other components of your factory. My daughter Ella’s very first factory was created to produce Bio Fuel , which is the most efficient type of fuel in the early stage of the game. In order to make bio fuel, Ella created a factory pipeline that would take leaves and grass, convert that into bio mass and then take the bio mass and convert that into bio fuel.

When she built the factory, she had the idea of keeping 50% of her bio mass as-is and storing it, and then sending 50% of the biomass down the pipeline to be converted to bio fuel. Early on this technique made sense but over time we realized that anything that would take bio mass as a fuel source, would also take bio fuel as a fuel source. The difference is that bio mass burns a lot faster, so a generator might consume 18 units of bio mass per minute, but would only consume 4 units of bio fuel per minute for the same power output.

Recommending change to the way things are done

Once I realized that bio fuel could be used in everything, I suggested to Ella that we just focus her factory on creating bio fuel instead of storing 50% of our bio mass as is. With many different factories running, you can spend a lot of time making sure your generators are fueled. Having to fuel them less often is a huge productivity boost for your game play. To my surprise, Ella was very resistant to the idea. Like any proposed change in any setting, Ella had a laundry list of defenses for why things should remain the same.

“We might need bio mass later in the game” was her first retort. A fair one for someone not familiar with these types of gameplay loops. But I leaned on my 20+ years of experience playing these types of games to try to rationalize with her why this isn’t likely. I explained how the game play progression typically has us moving forward and that it wouldn’t be long before we probably wouldn’t be using bio fuel either. And bio mass is so easy to acquire that it wouldn’t be a problem if we needed to build a new factory later. But sometimes experience isn’t convincing to people.

“But there’s no downside to us just storing it” came next from her. That’s true, except it’s horribly inefficient. We almost never opt for bio mass, unless we’re out of bio fuel. And often what would happen is the storage container we used to store the bio mass would fill up, which would force us to convert it to bio fuel anyways to make space in the container. But this was a manual process, so again it hit our productivity and the productivity of the factory as a whole. Inefficiency though can get so embedded in the process that people just live with it because it seems easier than the alternative.

“Bio mass is just as good as bio fuel.” Here’s a scenario where data I thought would surely win the day. As I mentioned earlier, the game tells us the burn rate of fuel types. Bio mass burns 18 units per minute while bio fuel burns 4 units per minute. Each generator can accept a stack of 200 of either fuel type. Doing the math means we need to refill bio fuel generators every 50 minutes, but bio mass generators roughly every 11 minutes. I thought the data would make this an easy conversation, but if you work in any office setting, you probably already know where this is going.

“I don’t know if that data is right.” Now she challenges the validity of the data provided by the video game developers. I don’t know if she’s thinking there’s a global conspiracy against the bio mass industry or if the developer is staffed by activists pushing an agenda. She claims that when she watched the burner it felt like they burned around the same amount of time. Now I’m starting to lose my patience a little bit.

“I just don’t think it’s worth changing the entire factory for this.” We’re finally getting to the root of the issue now! She just doesn’t feel like doing the work. I don’t think the work is actually that much but I’m a bit more experienced than she is so I can see how she might think it’s a bigger task. I offer to do it for her. Finally the last wall of resistance crumbles. She agrees to the change and decides she’ll implement it as soon as she finishes a few high priority factory tasks, ironically one of which is refueling a bunch of bio mass burning generators.

Implementing the change

Ella implemented the change in the most efficient manner possible. The conveyor belt that carries the bio mass goes into a conveyor belt splitter, sending half the bio mass to storage and half the bio mass to be created into bio fuel. She opted to just delete the conveyor belt that would have shipped the bio mass into a storage container. One minor tweak and suddenly the reality we were fighting about had finally come to fruition. We’re only producing bio fuel and we’re producing it at a much higher rate because the delivery of bio mass is now 50% faster. (Since we’re no longer splitting it)

If you’ve been reading this from the perspective of an employee at a company, a lot of this probably resonates with you. Remove video games and replace it with whatever it is your company does, and you’ve probably had a lot of these very same conversations with co-workers. And it’s easy to assign laziness, ambivalence, lack of empathy or any other host of adjectives to describe that co-workers work ethic.

The case with my daughter is the ideal scenario. The entire exercise was one of fun and recreation. The work that needed to be done was literally part of the game loop, the very thing that makes the game fun. The task was completely owned by Ella from beginning to end, so she could implement it any way she wanted. Despite all these things going for it, resistance still crept in. Why? Because it’s not the work, it’s the change.

Change is a funny thing for some people. It brings in uncertainty and doubt for the future. The devil you know versus the devil you don’t. Dealing with the inefficiencies of the current factory was a lot easier for Ella to get her head around than the potential problems that could be created by redesigning the factory from the ground up. What if she ran out of materials during the rebuild? What if she couldn’t get the pieces lined up properly? What if we ran out of fuel in our generators while the fuel factory was being rebuilt? I’m sure all these things were swirling in her mind at a subconscious level, which then consciously manifested themselves as resistance to change, with a set of adopted biases to justify that change. Confirmation bias is what happens when we interpret information in a way that confirms or supports a set of prior held beliefs. It’s what allowed Ella to replace hard data with her general feeling of how fast fuel burned. Keeping an eye out for when we might fall victim to confirmation bias is a part of being “data driven”. I put that in quotes because many people and organizations are “data driven as long as it supports what I wanted to do anyway”, which isn’t exactly the same thing. Confirmation bias plays a huge part in that mindset.

Chesterton’s Fence

Another observation I had made was how the factory was left in this modified state that might not make a ton of sense to the next set of factory workers. With the intent of the factory going from making bio mass and bio fuel, to just making bio fuel, many of the components of the factory don’t serve a functional purpose any more. We have a conveyor belt splitter that doesn’t split to anything. We have a storage container that isn’t connected to the factory at all any more. We have an extra storage container in the pipeline that doesn’t make sense with just a single fuel type being produced. If I were a new employee at this factory, I’d be a little baffled as to why these things exist. This made me think of Chesterton’s Fence and how it plays in our comfort levels when making changes.

Chesterton’s Fence is a concept of second order thinking where we not only think about the consequences of our decisions, but the consequences of those consequences. The phrase comes from the book The Thing by G.K. Chesterton. In the book, a character sees a fence but fails to see why it exists. Before removing the fence he must first understand why it was there in the first place.

As a new factory worker who is trying to make the fuel factory more efficient, I might be confused by these extra components scattered about the system. What if they had a purpose that I’m unaware of? What would removing these things from the system do? Their uselessness seems so obvious that it almost makes it even more daunting of a task to remove it because you have no idea why it exists.

This is a common problem we see with hastily implemented changes. The change is designed to deliver the value needed now as quickly as possible but sometimes at the expense of clarity for future operators of the system. Thinking about the consequences of our consequences can create a more sustainable future but at the same time, put more work on our plates in the present.

Wrap up

This post ended up going on way longer than I expected and if you’ve reached the end you deserve a cookie or a smart tart or something. The parallels in behavior between my video game-playing daughter and senior people in large organizations is startling. The truth is these behaviors are our default state of mind. Only with the awareness of our faults can we improve.

Some key takeaways from this lesson for me are:

- Biases exist early on in life and you’re not immune to them.

- Keep an eye out for confirmation bias. It can make you believe some crazy stuff

- People fear change, even in the most optimal of situations.

- Second-order thinking can help us evaluate the consequences of our consequences. It also pressures us to understand the intent behind something before we go about changing it.